以前、ニューラルネットワークによる文字認識の仕組みを可視化した例をまとめたことがあったけど

こちらはBrendan Bycroft氏による、昨今話題のLLM(大規模言語モデル)の仕組みを各プロセスの解説と共にビジュアルで理解できるWebページ↓

LLM Visualization

Project #2: LLM Visualization

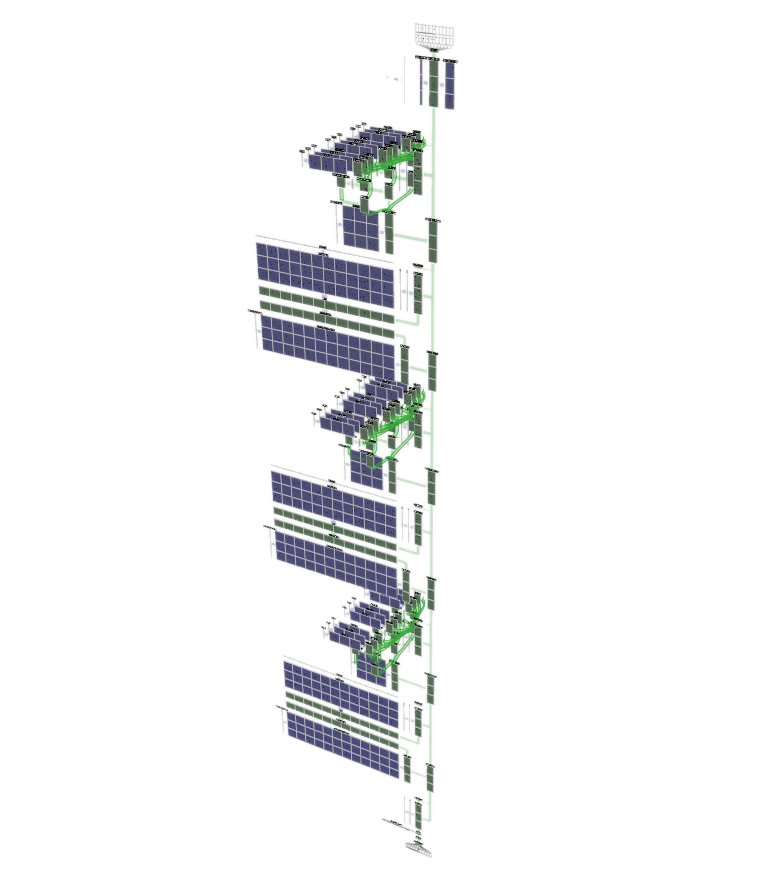

So I created a web-page to visualize a small LLM, of the sort that's behind ChatGPT. Rendered in 3D, it shows all the steps to run a single token inference. (link in bio) pic.twitter.com/nuxHi6cR5n

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

このプロジェクトは、GPT-styleネットワークの実際に動作する実装の3Dモデルを表示します。このネットワーク構造はOpenAIのGPT-2, GPT-3(そしておそらくGPT-4)で使用されているものです。

OpenAIのChatGPTの裏側にあるLLMアルゴリズムを視覚的にウォークスルーできます。加算と乗算に至るまでアルゴリズムを隈なく探索でき、プロセス全体の動作を確認できます。

最初に表示されるネットワーク(nano-gpt)は、小さなGPT-styleネットワークで、文字A、B、Cで構成された小さなリストの並び替えを行います。これはAndrej KarpathyによるminGPT実装デモのサンプルモデルです。

このレンダラーは、任意のサイズのネットワークの可視化もサポートしており、より小さいGPT-2サイズでも動作しますが、重み(数100MBある)はダウンロードされません。

初学者にとってとても良い教材だ。ソースコードもGitHubで公開されている↓

https://github.com/bbycroft/llm-viz

Project #2: LLM Visualization

So I created a web-page to visualize a small LLM, of the sort that's behind ChatGPT. Rendered in 3D, it shows all the steps to run a single token inference. (link in bio) pic.twitter.com/nuxHi6cR5n

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

It also contains a walkthrough/guide of the steps, as well as a few interactive elements to play with.

Why, you ask? For what purpose did I put all the time & effort into this project? pic.twitter.com/nBarLaCyeP

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

There's a real advantage to unpacking a set of abstractions, flattening them out. Abstractions can be useful for terseness and management, but they can be a real blocker to seeing the big picture.

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

With this, you can see the whole thing at once. You can see where the computation takes place, its complexity, and relative sizes of the tensors & weights. pic.twitter.com/8AJq762YVU

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

The model with all the animations is tiiny, to make it tractable. For comparison, I threw in a few of the larger models (GPT-2, GPT-3), render-only.

And when you see what it takes to just produce a single value in a mat-mul, the sheer scale of these things becomes apparent. pic.twitter.com/uOIZml8Nhz

スポンサーリンク

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

(Here's what goes into calculating a _single_ output value of a matrix-multiply) pic.twitter.com/fkYEXyf2l5

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

What about understanding what each layer does? Uhh, sorry, won't be much help.

The project just came out of "Let's build a 3D viz!", so the scope is a bit limited. It's more: here's a way to learn & digest the algorithm, and perhaps think about how to optimize the process.

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

As for what I got out of creating this: before I made it, I mostly knew how image convolution nets worked, but language-based models seemed kinda magical in comparison.

Well, now I know them in a fair amount of detail!

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

I also learnt a good amount of GL (dF/dx, fwidth, ubos, instancing), and animation approaches. So, uhh, even if no-one sees this, the project definitely has some value to me.

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

Oh yeah, the link is here: https://t.co/fc1ihJ3Lac Works best on desktop (sorry mobile). Left-click drag, right-click rotate, scroll to zoom. And hover over the tensor cells. Blue cells are weights/parameters, green cells are intermediate values. Each cell is a single number! pic.twitter.com/cCQMeFqEes

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

Well, I hope you find it interesting. Let me know your thoughts! And if someone makes it through the walkthrough and finds it a little ~incomplete towards the end I might even getting around to fix it (my attention has largely turned to other projects oops)

— Brendan Bycroft (@BrendanBycroft) December 2, 2023

3Blue1Brownと有志が日本語訳している3Blue1BrownJapanでもLLMの解説動画が公開されている↓

スポンサーリンク