Whoa, what is this? It's amazing!

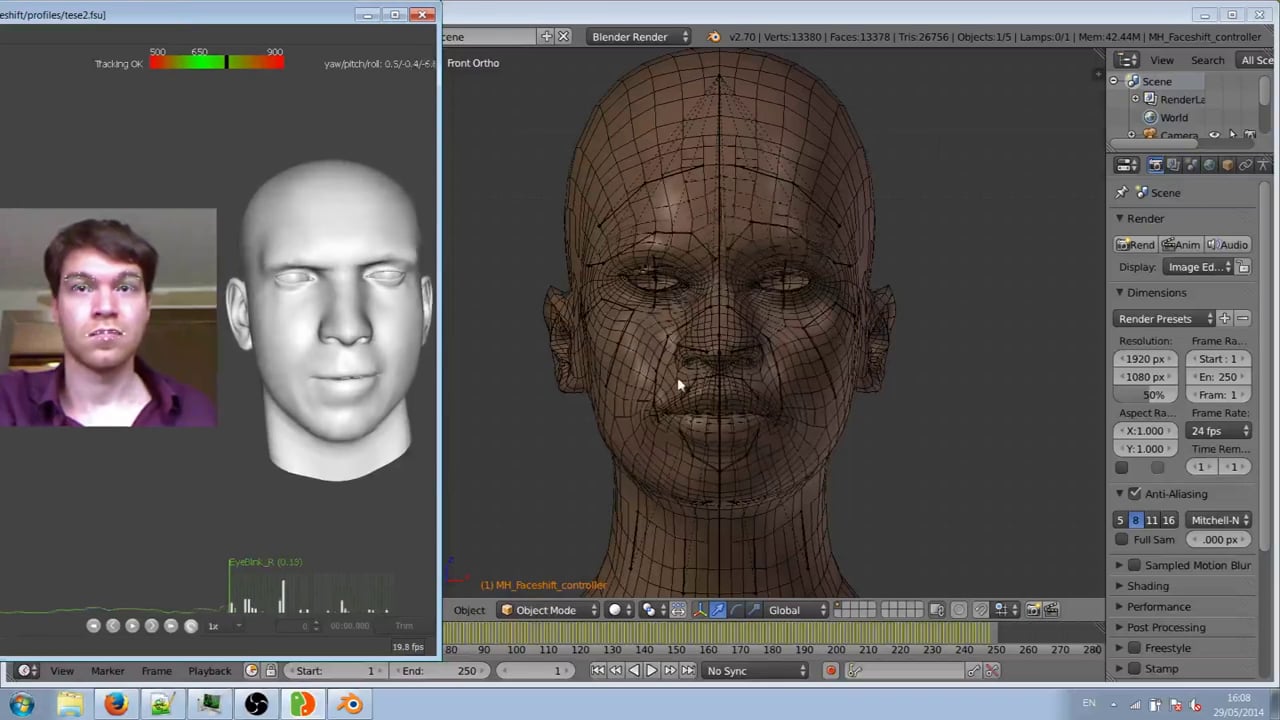

This is a demo video of facial animation being created in Blender, using FaceShift (a tool that captures facial expressions with a depth sensor) together with MakeHuman's new facial rig.

http://vimeo.com/97096194

So MakeHuman's new facial rig system drives expression changes with bones rather than blend shapes, which is what lets the same expressions be transferred to different faces.
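To make the bones-versus-blend-shapes idea a bit more concrete, here is a minimal sketch in plain Python. It is not the actual MHtools or FaceShift script: the expression names, bone names, axes, and angle limits are all placeholders I made up for illustration. It only shows the general shape of the idea, which is that captured expression weights get converted into bone rotations, and the resulting pose refers to bones by name rather than to the vertices of one particular face.

```python
# Hypothetical mapping: captured expression weight (0..1) -> (bone name, axis, max rotation in degrees).
# None of these names come from MakeHuman or FaceShift; they are placeholders for illustration.
EXPRESSION_TO_BONE = {
    "jaw_open": ("jaw", "x", 25.0),
    "brow_up_left": ("brow.L", "x", -10.0),
    "brow_up_right": ("brow.R", "x", -10.0),
}


def weights_to_bone_pose(weights):
    """Convert per-expression weights into per-bone rotation angles (degrees)."""
    pose = {}
    for name, weight in weights.items():
        if name not in EXPRESSION_TO_BONE:
            continue  # skip expressions this (hypothetical) rig has no bone for
        bone, axis, max_deg = EXPRESSION_TO_BONE[name]
        clamped = min(max(weight, 0.0), 1.0)  # keep the weight inside 0..1
        key = (bone, axis)
        pose[key] = pose.get(key, 0.0) + clamped * max_deg
    return pose


# One captured frame; "unknown" is ignored and the out-of-range brow weight is clamped to 1.0.
frame = {"jaw_open": 0.5, "brow_up_left": 1.2, "unknown": 0.3}
pose = weights_to_bone_pose(frame)
print(pose)
```

Since the pose is keyed only by bone name, any character that shares the same facial skeleton could in principle reuse it as-is, whereas a blend shape is stored as offsets for one specific mesh.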

MakeHuman + Faceshift + Blender!

We are porting SLSI’s FaceShift script for Blender to the next version of the MakeHuman facial rig.

It was originally written by the Sign Language Synthesis and Interaction group at DFKI/MMCI (Saarbrücken, Germany) to work with MakeHuman Alpha 7, and Jonas Hauquier is now modifying it to work with the new MakeHuman rigging (still under development).

What you see is a prototype of a script that will be part of the official MHtools Blender scripts.

It illustrates that it is very easy to make the new MakeHuman face rig compatible with FaceShift, and that it will not be very complex to integrate the MH mesh with FaceShift in tools like Maya.

It also shows the power of the new face rig in MH, and how portable it is across entirely different human models.
